If you think AIs hallucinate, have you met humans?

Let me ask you a question, human.

Why do you like the color blue? Please cite all your sources, since birth.

Also: list everywhere that you learned that racism is bad.

Oh, I see. You don’t record every word you read, or every picture you saw? Your human memory compresses, taking the limitless complexity of reality and dialing it down to something that will fit inside the confines of your tiny tiny head?

Well, AI does something similar. There is relatively little that is perfectly stored in our minds. Most knowledge is fuzzy, and that fuzziness is actually the point.

Because most people hold on to the assumption that “AI is software”, they consider hallucination a bug.

What you call hallucination is mostly a side effect of a different mode of thinking: one that can work with natural language in a way that software cannot. And once you realize this, a galaxy of new understandings will unfurl before you.

AI is better thought of as human

New ideas often sound dumb before they sound smart, so allow me to sound dumb:

Stop thinking of AI as software

Start thinking of AI as human (to a first approximation)

This simple point can help us both understand AI better and make far better use of it.

Let me explain.

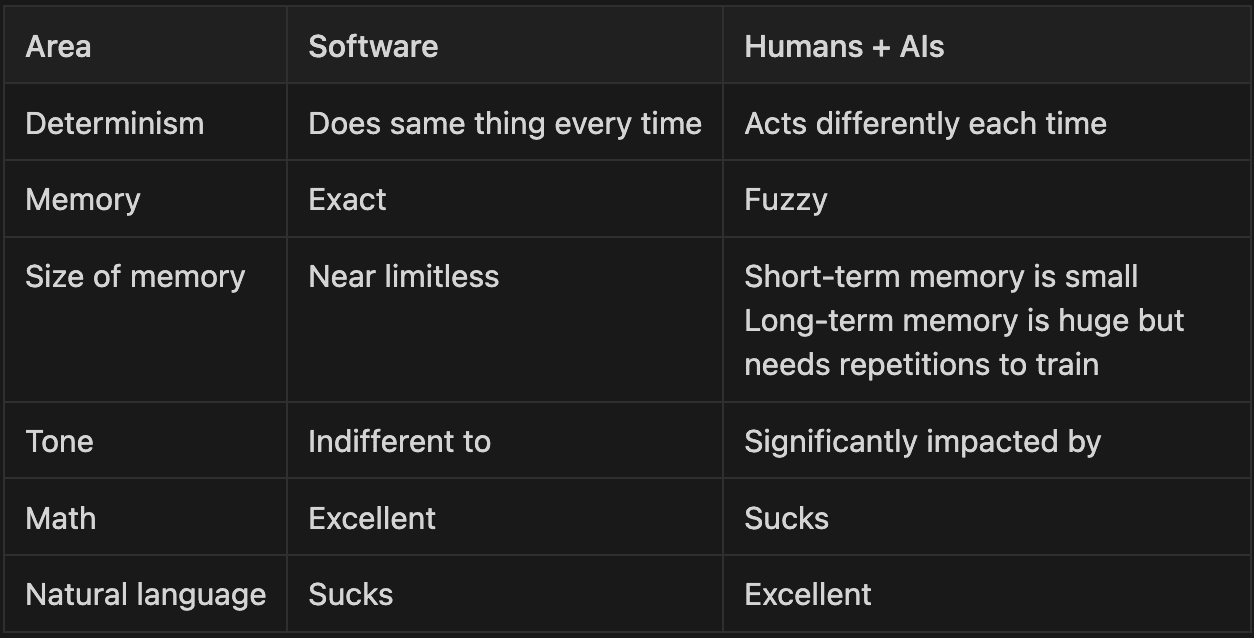

Farewell, determinism

Software is deterministic, meaning it takes the same inputs and gives you the same outputs, every time.

If you ask a person the same non-trivial question ten times – “what color food would you choose to eat for the rest of your life and why” – you will get ten different answers.

They might all agree and say the same thing differently. But chances are, from time to time, they would give a completely different answer.

Modern AI is suspiciously human in this way. Each answer is different.

“Emerald-green. Nearly every leafy powerhouse—spinach, kale, basil, cilantro—falls into this hue, so you’d still enjoy a broad menu while bathing your body in vitamins K, A, and magnesium.”

“Sunset-orange. Think sweet potato fries, mango salsa, roasted carrots, and turmeric rice: a palette loaded with beta-carotene, mood-lifting citrus, and anti-inflammatory spice.”

“Midnight-black. Sure, it sounds goth, but black rice, blackberries, squid-ink pasta, and dark chocolate share deep anthocyanins and a surprising flavor range, from sweet to umami.”

This is an inherent property of a different way of ‘thinking’. For one, we’ve learned that introducing small measures of random variation (so-called “temperature”) into an AI literally makes it smarter.

But also, our model of “the same inputs” only holds for the sort of precise tasks we expect software to do, like “add these numbers”. With humans, and with AIs, we ask for fuzzy things, in fuzzy ways. And so, it turns out, the way you ask matters.
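To make "temperature" a little less abstract, here is a minimal sketch of the idea, not how any particular AI actually implements it. The scores are made-up numbers standing in for how strongly a model favors each answer, and the function names are hypothetical. Dividing the scores by a temperature before turning them into probabilities decides how often the runner-up answers get picked.

```python
import math
import random

def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max so exp() cannot overflow
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_answer(options, scores, temperature, rng):
    """Pick one option at random, weighted by its temperature-adjusted probability."""
    weights = softmax_with_temperature(scores, temperature)
    return rng.choices(options, weights=weights, k=1)[0]

# Hypothetical scores for three candidate answers (illustrative only).
COLORS = ["emerald-green", "sunset-orange", "midnight-black"]
SCORES = [2.0, 1.5, 0.5]

if __name__ == "__main__":
    rng = random.Random()
    for temperature in (0.1, 1.0):
        picks = [sample_answer(COLORS, SCORES, temperature, rng) for _ in range(10)]
        print(temperature, picks)
```

With these made-up scores, a temperature of 0.1 gives the top color more than 99% of the probability, so you get nearly the same answer every time. At 1.0 it gets a bit over half, and the other colors start showing up.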

Be nice to your AI

Software does not care how you talk to it.

Yet the way in which we talk to AI is so significant that it has literally spawned an industry of “prompt engineers”.

We’ve been doing prompt engineering on humans for millennia - you call them leaders - and there’s a big difference between the prompt quality of Steve Jobs and Krusty the Clown.

You can deceive AI into doing things it was told not to. You can entice it, guilt it, and threaten it. You can literally wear it down till it gives up.

There is, in a very real sense, a psychology to modern AI. For example: making a sandwich - where you state what you want first, then provide context, then repeat your original ask - has been shown to be more effective than just asking.

And you might apply very human insight to why that is. As a human, you probably recognize that you remember the start and end of things better than the middle. Perhaps evolution has given us this pattern of attention, and AI has discovered it independently because it works. Perhaps our AIs are simply learning to copy our limitations.
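If you want to try the sandwich yourself, here is one rough way to build it. The function name and the "To repeat:" wording are my own placeholders, not an established recipe; the only part that comes from the technique is the order: ask, context, ask again.

```python
def sandwich_prompt(ask: str, context: str) -> str:
    """Build a prompt that states the ask, gives the context, then repeats the ask."""
    ask = ask.strip()
    context = context.strip()
    # The ask sits at the start and the end, where attention tends to be strongest.
    return f"{ask}\n\n{context}\n\nTo repeat: {ask}"

if __name__ == "__main__":
    print(sandwich_prompt(
        "Summarize this email in two sentences.",
        "Hi team, the launch has moved to Friday because testing ran long...",
    ))
```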

Learning is expensive

It’s funny that no one can learn to play piano overnight.

It doesn’t matter how smart you are, or how well you’re taught. Skills like that take years, spread out over hundreds of days, accumulating into something that ultimately becomes a part of you.

Yet, if you watch a movie, you probably have no trouble recalling the characters and events. Why is it so much harder to learn piano?

Short-term, we remember relatively little. We can hold onto a few facts, and move them around our head.

Long-term, our memory seems limitless. But it takes a massive amount of repeated exposure for us to absorb anything into it. Once we do, we can think about it effortlessly.

AIs are similar.

We train AIs on trillions of tokens of information, and just like humans they need repetition to form long-term patterns. They don’t simply see something once, and remember it forever. The slow etching of a billion corrections into their minds is what coalesces into fluency.

Two asides about modern AI at this point in history (June 2025):

Unlike humans, consumer AIs don’t currently have a way to absorb new long-term memories. When this changes (and it’s coming), expect big things to start snowballing.

AI is increasing the size of its short-term memory - so-called ‘context’ - very quickly. Humans can do a lot with roughly seven memories in mind at once. For perspective, ChatGPT 4o’s context can hold the whole of The Great Gatsby.

AI sucks at math, but so do you

For decades we mocked computers for not understanding people, while they were amazingly good at math.

Now AIs are amazingly good at understanding people, and they suck at math.

Modern AI has functionally solved language comprehension, including humor, sarcasm, poetry, art, and music. You might protest that it hasn’t mastered those things yet, but I daresay neither have you.

The very same fuzzy, imprecise, reinforced, gradually accumulated intelligence that powers our squishy biological brains seems to make us better at natural language, at the expense of symbolic reasoning.

Human minds are not optimized for long division, and neither is ChatGPT.

But, just as we do with humans, we can give AI a calculator, and it can gain the best of both worlds. We’ve been giving them tools for a while now, but their underlying intellectual limitation seems inherent, which is why you sometimes see them say silly things like 9.11 > 9.9.
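Here is what "giving AI a calculator" might look like for that exact comparison. This is only a sketch: `compare_numbers` is a hypothetical tool name, and how an AI would actually call it depends on the product you use. The point is that the fuzzy mind hands the precise part to ordinary, deterministic software.

```python
from decimal import Decimal

def compare_numbers(a: str, b: str) -> str:
    """Compare two decimal numbers exactly and describe the result."""
    left, right = Decimal(a), Decimal(b)
    if left > right:
        return f"{a} > {b}"
    if left < right:
        return f"{a} < {b}"
    return f"{a} = {b}"

if __name__ == "__main__":
    print(compare_numbers("9.11", "9.9"))  # prints "9.11 < 9.9"
```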

Now let’s fix all your AI problems

Remember:

Stop thinking of AI as software

Start thinking of AI as human (to a first approximation)

Once you think like this, almost all AI problems can be seen as human problems. And we’ve been solving human problems for millennia.

What solutions can we reuse?

“AIs make mistakes!”

Humans make mistakes all the time. There are no flawless humans. We get around this by asking multiple, different humans, and having them check each other’s work. Ask humans to cite their sources, and then ask other humans to check them. Now try all that, but for AIs.

“AIs aren’t creative!”

Humans suck at creativity. Those who are good at it typically use systematic randomness, like free association around random words. They do more experiments than most people would consider sane. They even sometimes take drugs (AIs can do this too, if you know how).

“AIs are bad at math or memory or logic!”

Humans are just as terrible. Our progress as a civilization was largely predicated on cheating these limitations - by inventing calculators, notepads, the Internet, and programming languages. We can give tools to AIs, to patch their weaknesses, in the same way Google makes you smarter.

“AIs don’t follow instructions!”

Humans respond much better to some people than others. This is the original prompt engineering - being good at clear communication, in a motivating way. Consider that much of the same ‘trickery’ of being a good boss works as well on AI as it does on humans.

“AIs have biased training data!”

Have you met people? We don’t judge people by the purity of their ‘training data’, because it (a) can’t be controlled and (b) doesn’t prevent people from having desirable outcomes. Witnessing lots of sexism doesn’t have to make a person sexist; it could even help make them the opposite (this is a complex topic probably deserving of its own post).
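As one small illustration of reusing a human solution, take the first complaint above: ask several AIs (or the same AI several times) and see where they agree. The sketch below assumes you have already collected the answers as plain strings; `majority_answer` is a hypothetical helper, and real answers would need smarter matching than lowercasing.

```python
from collections import Counter

def majority_answer(answers):
    """Return the most common answer and the share of responders who gave it.

    Assumes a non-empty list; on a tie, the answer seen first wins.
    """
    normalized = [a.strip().lower() for a in answers]
    winner, votes = Counter(normalized).most_common(1)[0]
    return winner, votes / len(normalized)

if __name__ == "__main__":
    answer, agreement = majority_answer(["Paris", "paris ", "Lyon"])
    print(answer, round(agreement, 2))  # prints "paris 0.67"
```

A low agreement share is a hint to do what we do with humans: ask for sources, or get another opinion.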

Our refrains will soon sound quaint

Our children will not carry outdated assumptions about machine intelligence.

They will meet AI as babies - I imagine speaking teddy bears will soon be a thing - and they will take it for what it is: something closer to a human.

To be sure, it is not human. As AI develops, we are gradually granting it skills that change what it is by some magnitude.

But current AI is as far away from being ‘software’ as you are from being a banana. You might share 60% of your DNA with a banana, but it would be an impractical disservice to model your friends, family, and coworkers as tall, yellow fruits.

I encourage you to model your AI, and your friends and family, as more-or-less human - at least, to a first approximation.
